Methodology and Results

AIAIA workflows

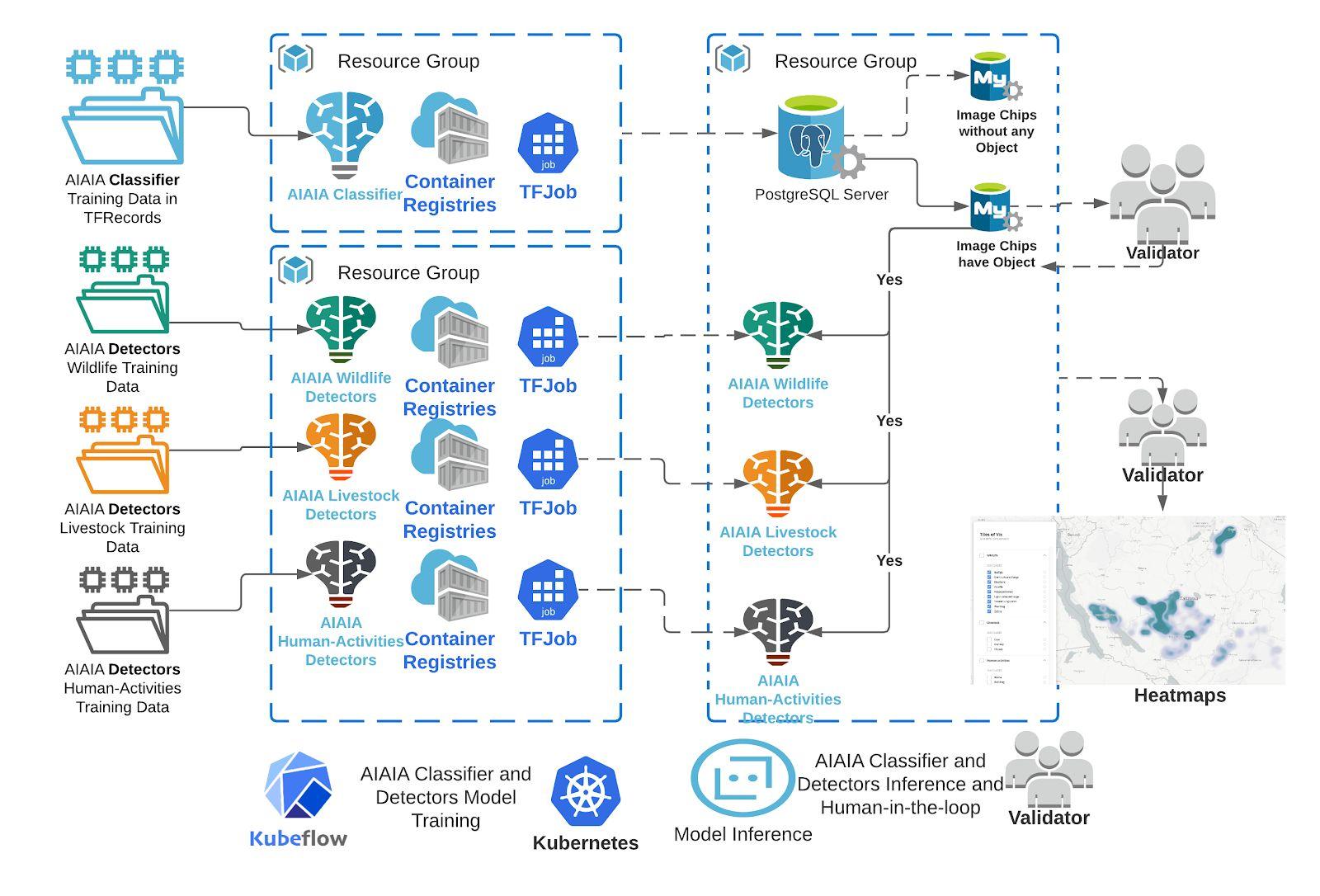

Two AI-assisted workflows were built in this study: an AIAIA Classifier and three AIAIA Detectors. The AIAIA Classifier filters for images that contain our “objects of interest”: humans and settlements, wildlife, livestock, or any combination of them. The AIAIA Detectors, built on object detection models (TensorFlow Object Detection API), separately detect and count wildlife species, humans and settlements, and livestock species. The end-to-end workflow, comprising the classifier and the three detectors, aims to reduce by 50% the cost of conducting game counts and of assessing human influence in wildlife conservation, enabling more frequent monitoring.
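The two-stage flow described above can be illustrated with a minimal sketch. It is not the project's actual code: the function names `run_pipeline` and `count_by_label`, the 0.5 score threshold, and the dictionary layout of a detection are all assumptions made for illustration. The classifier and detectors are passed in as plain callables, so any model wrapper could stand in for them.

```python
from typing import Any, Callable, Dict, List

# Hypothetical shape of one detection: {"label": "elephant", "score": 0.91}
Detection = Dict[str, Any]


def count_by_label(detections: List[Detection]) -> Dict[str, int]:
    """Count detections per class label."""
    counts: Dict[str, int] = {}
    for det in detections:
        counts[det["label"]] = counts.get(det["label"], 0) + 1
    return counts


def run_pipeline(
    images: Dict[str, Any],
    classifier: Callable[[Any], float],
    detectors: Dict[str, Callable[[Any], List[Detection]]],
    threshold: float = 0.5,  # assumed cut-off, not taken from the study
) -> Dict[str, Dict[str, Dict[str, int]]]:
    """Filter images with the classifier, then run every detector on the rest."""
    results: Dict[str, Dict[str, Dict[str, int]]] = {}
    for name, image in images.items():
        # Stage 1: skip images unlikely to contain an object of interest.
        if classifier(image) < threshold:
            continue
        # Stage 2: each detector reports per-label counts for the image.
        results[name] = {
            det_name: count_by_label(detect(image))
            for det_name, detect in detectors.items()
        }
    return results
```

Running the detectors only on images that pass the classifier is what keeps the second, more expensive stage off empty frames.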

The AIAIA Classifier and Detectors were deployed on Kubeflow and Kubernetes, which allow machine learning and cloud engineers to run model training and experiments quickly and efficiently with TFJobs (Figure 6). Each training run and experiment was recorded in a TFJob YAML file, so it is traceable. The best-performing model for the classifier and for each detector was identified using model evaluation metrics. For the AIAIA Classifier, we used F1, precision, and recall scores as well as an ROC curve computed on the test dataset. For the detectors, we computed confusion matrices, F1 scores, mean average precision, and recall scores.
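As a reminder of how the precision, recall, and F1 scores mentioned above relate to one another, here is a minimal sketch that derives them from true-positive, false-positive, and false-negative counts, together with a small confusion-matrix helper. The function names and the example counts are illustrative assumptions, not the study's evaluation code or results.

```python
from typing import Dict, List, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from raw counts; 0.0 when undefined."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def confusion_matrix(
    y_true: List[str], y_pred: List[str]
) -> Dict[Tuple[str, str], int]:
    """Count (true label, predicted label) pairs."""
    matrix: Dict[Tuple[str, str], int] = {}
    for t, p in zip(y_true, y_pred):
        matrix[(t, p)] = matrix.get((t, p), 0) + 1
    return matrix


# Made-up counts for illustration only.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

F1 is the harmonic mean of precision and recall, so it stays low unless both are high, which makes it a convenient single number for comparing candidate models.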