Methodology

Sat-Xception and the school classifier

Selecting a machine learning framework

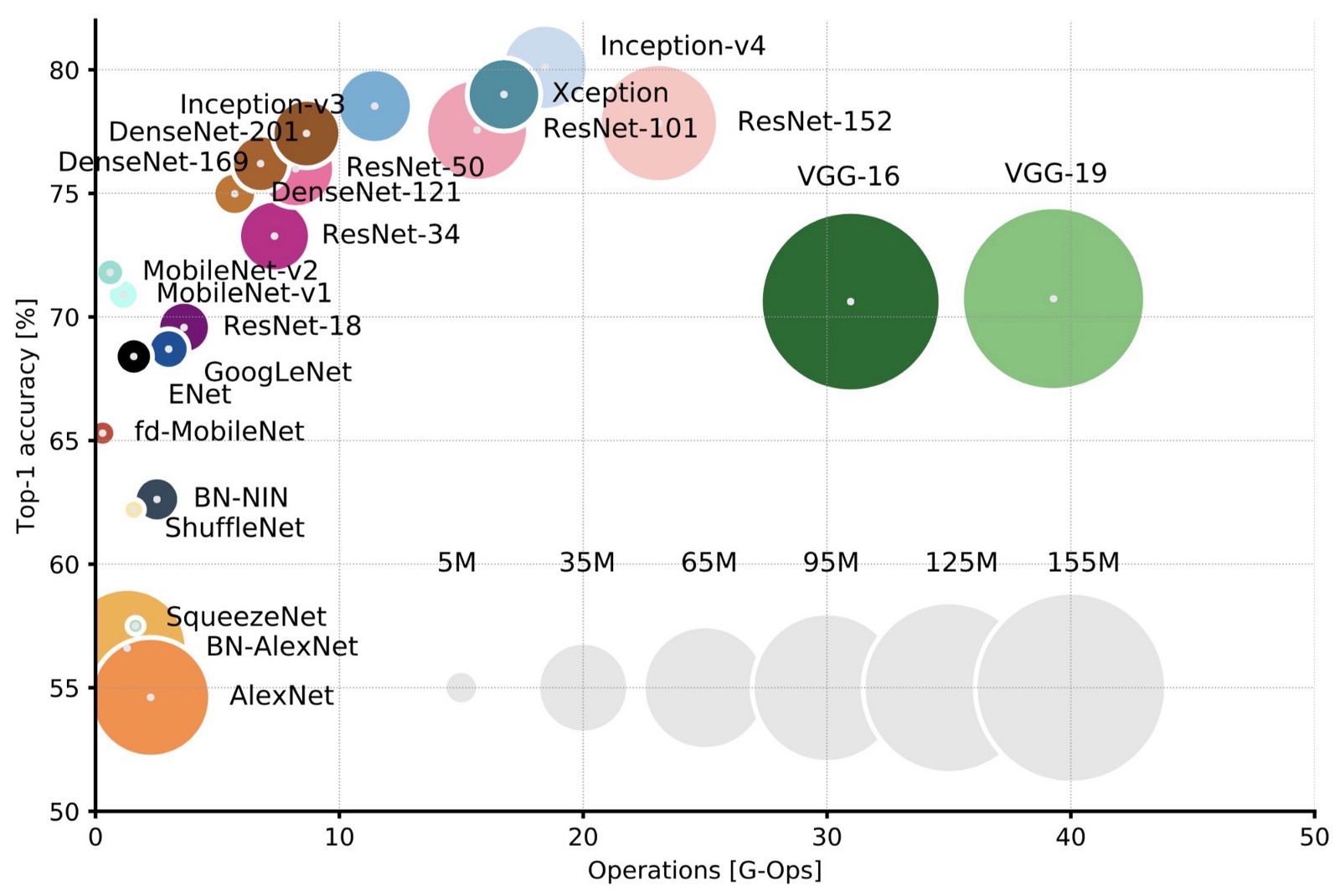

We ran initial tests on two promising pre-trained models, Xception and MobileNetV2, both of which are built into our Sat-Xception package. Preliminary testing showed slightly better results from Xception, so we selected it for the remainder of the project. However, MobileNetV2 took only a quarter of the time per training iteration on exactly the same training set. If you do not have access to rich cloud computing resources, consider training the model with MobileNetV2 instead.

Sat-Xception

To quickly train the school classifier, we created a deep learning Python package called Sat-Xception, which builds on models pre-trained on ImageNet. It is currently private, and we will open source it soon. Xception and MobileNetV2 are the two pre-trained models built into the package. The package is designed to be quick to install and to transfer-learn and fine-tune image classifiers with the built-in pre-trained models, so it can be used to train other classifiers, not just the school classifier described here. The models are written in Keras, a high-level Python package that lets users quickly reconstruct neural networks, with Google's TensorFlow as the backend.

Xception is one of the current state-of-the-art CNN architectures, with weights pre-trained on ImageNet. It is a high-performing and efficient network compared to other pre-trained networks. MobileNetV2, on the other hand, is slightly less accurate than Xception. However, it is very lightweight, fast, and easy to tune when limited resources are available, which makes it appropriate for scenarios with a resource vs. accuracy tradeoff. Both Xception and MobileNetV2 have fewer hyper-parameters available to tune than other pre-trained models, e.g. VGG and Inception, but both are high-performing models.
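As an illustration of the transfer-learning setup described above, here is a minimal Keras sketch. It is not the Sat-Xception source code, which is not public; the helper name `build_binary_classifier`, the single sigmoid output, the optimizer, and the learning rate are all assumptions made for the example. It relies only on the standard `tf.keras.applications` backbones.

```python
# Default ImageNet input sizes for the two backbones (height, width, channels).
INPUT_SHAPES = {
    "xception": (299, 299, 3),
    "mobilenetv2": (224, 224, 3),
}


def build_binary_classifier(base_name="xception", weights="imagenet", train_base=False):
    """Attach one sigmoid unit to a pre-trained backbone (hypothetical helper)."""
    # Imported lazily so this module can be loaded without TensorFlow installed.
    import tensorflow as tf

    backbones = {
        "xception": tf.keras.applications.Xception,
        "mobilenetv2": tf.keras.applications.MobileNetV2,
    }
    input_shape = INPUT_SHAPES[base_name]

    # Drop the ImageNet classification head and average-pool the feature maps.
    base = backbones[base_name](
        include_top=False, weights=weights, input_shape=input_shape, pooling="avg"
    )
    # Frozen backbone = transfer learning; set train_base=True to fine-tune.
    base.trainable = train_base

    # Inputs are expected to be scaled to [-1, 1], as both backbones assume.
    inputs = tf.keras.Input(shape=input_shape)
    features = base(inputs, training=False)  # keep batch norm in inference mode
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(features)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),  # assumed value
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Swapping `base_name` between `"xception"` and `"mobilenetv2"` is the only change needed to compare the two backbones on the same data.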

Training a school classifier with Sat-Xception

To install Sat-Xception, then transfer-learn and fine-tune an image classification model, you need to:

- set up a Python environment, using conda to create a virtual environment or using pyenv;
- git clone this repo (will open source soon);
- cd to main_model, where the Sat-Xception core script is located;
- run `pip3 install -e .` or `pip install -e .`

We organize the training dataset as follows:

    main_model/
    ├── train/
    │   ├── not-school
    │   └── school
    ├── valid/
    │   ├── not-school
    │   └── school
    └── test/
        ├── not-school
        └── school
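Before training, it can help to confirm that the folders match the layout above. The following is a small sketch using only the standard library; `count_tiles` is a hypothetical helper and not part of Sat-Xception.

```python
from pathlib import Path

SPLITS = ("train", "valid", "test")
CLASSES = ("not-school", "school")


def count_tiles(root):
    """Return {split: {class: n_files}}; raise if an expected folder is missing."""
    root = Path(root)
    counts = {}
    for split in SPLITS:
        counts[split] = {}
        for cls in CLASSES:
            folder = root / split / cls
            if not folder.is_dir():
                raise FileNotFoundError(f"missing folder: {folder}")
            # Count regular files only; nested directories are ignored.
            counts[split][cls] = sum(1 for p in folder.iterdir() if p.is_file())
    return counts
```

Calling `count_tiles("main_model")` also gives a quick view of class balance in each split.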

To train a school classifier, or any other image classifier, you only need to install Sat-Xception and run train with the selected model. For instance, to train a school classifier with MobileNetV2, you can run:

sat_xception train -model=mobilenetv2 -train=train -valid=valid
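To compare several models on the same data, the command above can be scripted. This sketch assembles the same flags shown in the command (`-model`, `-train`, `-valid`). The value `xception` for `-model` is an assumption, since only `mobilenetv2` appears in the example, and the helper names are hypothetical.

```python
import subprocess


def build_train_command(model, train_dir="train", valid_dir="valid"):
    """Return the sat_xception training command as an argument list."""
    return [
        "sat_xception",
        "train",
        f"-model={model}",
        f"-train={train_dir}",
        f"-valid={valid_dir}",
    ]


def run_training(models=("xception", "mobilenetv2")):
    """Train each model in turn; stop at the first failing run."""
    for model in models:
        # "xception" as a -model value is assumed, not confirmed by the docs.
        subprocess.run(build_train_command(model), check=True)
```

Passing the command as a list avoids shell quoting problems if the directory names contain spaces.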

School classifier and model performance

We broke the training into two sessions. The first session was designed to test the feasibility of using Sat-Xception to train a well-performing school classifier in Colombia. In that session, the model was over-confident in rural Colombia, leading to too many false predictions in the area. To overcome the issue, the expert mapper team created a new training dataset that differed slightly from the one used in the first session. For the second training session, 2,048 “not-school” buildings were added. In addition, for the “school” category, we kept only rural schools that have very clear school features, and we randomly selected another 2,500 confirmed school tiles to add to the category.
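The random selection of confirmed school tiles can be made reproducible with a fixed seed. This is a sketch only: the function name, the seed, and the use of tile identifiers as plain strings are assumptions, not a description of how the selection was actually done.

```python
import random


def sample_tiles(tile_ids, k=2500, seed=42):
    """Pick k distinct tile IDs at random, reproducibly for a given seed."""
    pool = sorted(tile_ids)  # sort first so the result does not depend on input order
    if k > len(pool):
        raise ValueError(f"asked for {k} tiles but only {len(pool)} are available")
    return random.Random(seed).sample(pool, k)
```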

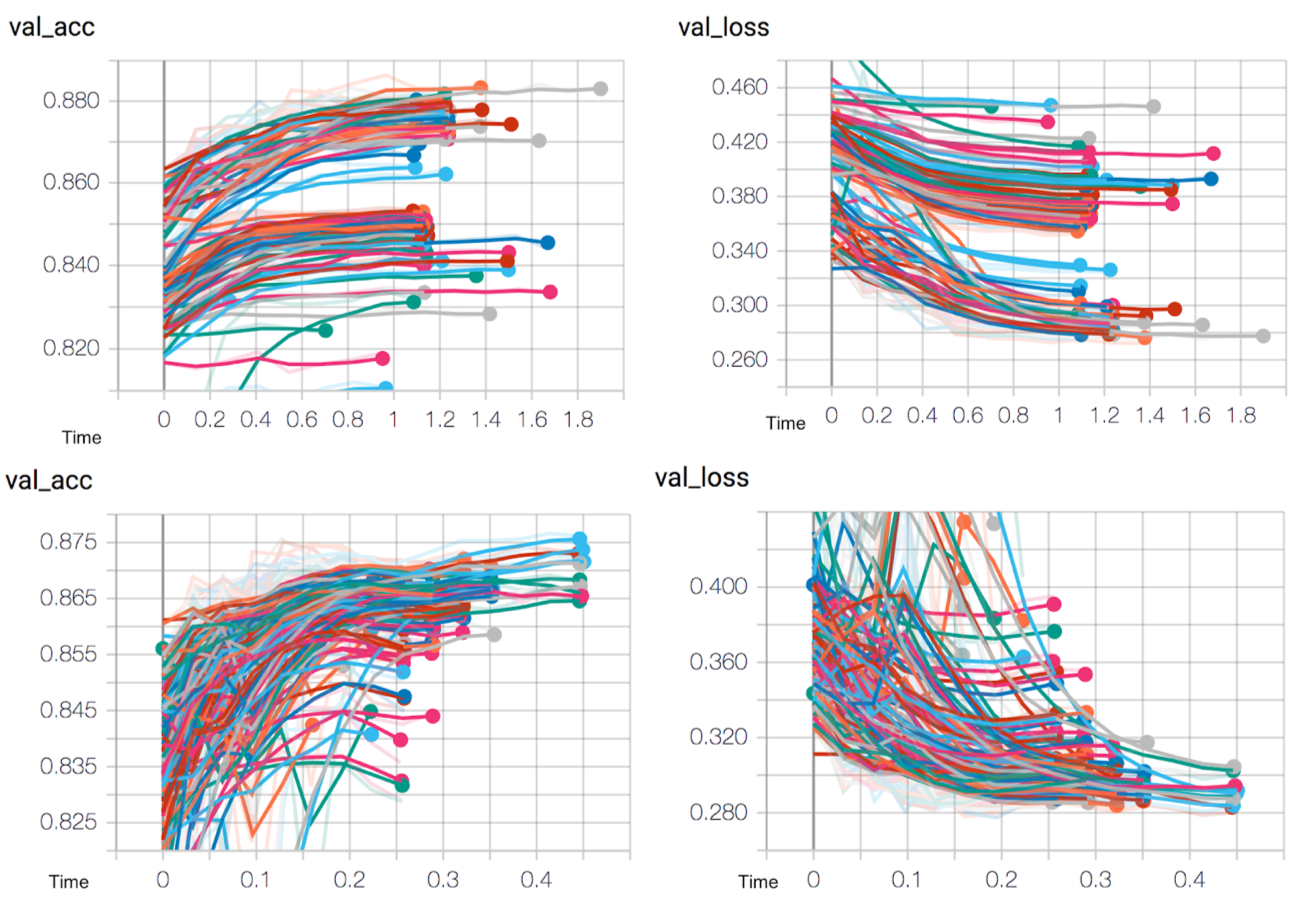

We trained about 200 model iterations on two separate AWS EC2 P3.2xlarge instances. The instances ran AWS's Deep Learning AMI, which comes with ready-to-use deep learning virtual environments, in our case Python 3 with the GPU version of TensorFlow pre-installed. The best-performing MobileNetV2 model reached a validation accuracy of 0.88. Xception reached a validation accuracy of 0.89, so we picked the model trained with Xception. We packaged the best-trained Xception model with TensorFlow Serving, which helps package Keras and TensorFlow models as a Docker image. The image can serve as an endpoint for model inference at large spatial scale, which allows us to run inference on tens of millions of image tiles per hour without manual supervision.
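A client can query such an endpoint through TensorFlow Serving's REST API, which accepts a JSON body with an `instances` list at `/v1/models/<name>:predict` and returns a `predictions` list. The sketch below uses only the standard library; the model name `school_classifier`, the host, and port 8501 (the TensorFlow Serving default for REST) are assumptions about the deployment.

```python
import json
from urllib import request


def build_predict_request(tiles, model_name="school_classifier",
                          host="http://localhost:8501"):
    """Build a POST request for TensorFlow Serving's REST predict endpoint."""
    url = f"{host}/v1/models/{model_name}:predict"
    # Each entry in `tiles` is one preprocessed image as nested lists of floats.
    body = json.dumps({"instances": tiles}).encode("utf-8")
    return request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(tiles, **kwargs):
    """Send the tiles to the server and return its list of predictions."""
    with request.urlopen(build_predict_request(tiles, **kwargs)) as resp:
        return json.loads(resp.read())["predictions"]
```

For inference at the scale described, tiles would normally be sent in batches, with several clients running in parallel.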